4 Newest Technology Trends in Computer Science

It’s hard not to appreciate the speed and breadth of innovation in computer technology that’s occurred in the past decade. From the metaverse to self-driving vehicles, software developers, engineers, and scientists have poured time and resources into forward-looking trends in tech.

Computer science professionals must keep up with new technology trends. Obtaining a bachelor’s degree in computer science can provide hands-on experience with some of these technologies and spark ideas for their potential use in various industries.

What Are the Latest Computer Science Tech Trends?

Tech innovations have the potential to create new markets and industries. Playing a role in the latest tech trend, whether you work in software development or computer science, gives you the power to shape how society operates. Understanding the latest technologies is crucial for software developers to remain valuable and relevant, whatever their industry.

Here are four of the newest trends and their potential impacts.

Trend 1: Artificial Intelligence

Artificial intelligence (AI) is any technology that enables machines to operate logically and autonomously. The application of AI seems limitless: Any task that requires rational and precise decision-making would benefit. However, this technology is typically used to divert rudimentary tasks from humans to machines, freeing us to address more complex problems.

AI is already common in customer service, where chatbots and virtual agents handle customer questions and problems. In healthcare, natural language processing (NLP) is used to interpret clinicians’ notes and convert them into data for electronic medical records. AI is also becoming a popular consumer product, with more people using Siri, Alexa, and Google Assistant to handle household tasks such as ordering food deliveries or turning off lights.

Trend 2: Quantum Computing

Computer technology is all zeros and ones, isn’t it? Well, perhaps not for long. A computer science tech trend receiving massive funding, according to McKinsey & Company, seeks to harness the behavior of subatomic particles in computer systems. Quantum computing moves beyond the classical bit, which can hold only one of two values: zero or one.

Instead, quantum computing uses quantum bits (qubits), which can exist in a superposition of zero and one until they are measured.
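To make the contrast with an ordinary bit more concrete, here is a minimal sketch in Python that simulates measuring a single qubit on a regular computer. It is an illustration only, not how quantum hardware is programmed: the function names (`measure`, `equal_superposition`, `sample_counts`) are hypothetical, and the sketch assumes the textbook rule that each outcome appears with probability equal to the squared magnitude of its amplitude.

```python
import math
import random


def measure(amp0: complex, amp1: complex, rng: random.Random) -> int:
    """Simulate one measurement of a qubit with amplitudes amp0 (for 0) and amp1 (for 1)."""
    p0 = abs(amp0) ** 2
    p1 = abs(amp1) ** 2
    if not math.isclose(p0 + p1, 1.0):
        raise ValueError("amplitudes must be normalized so the probabilities sum to 1")
    # Measuring always yields a plain 0 or 1; the amplitudes only set the odds.
    return 0 if rng.random() < p0 else 1


def equal_superposition() -> tuple[float, float]:
    """Amplitudes for a qubit that is equally likely to be read as 0 or 1."""
    a = 1 / math.sqrt(2)
    return (a, a)


def sample_counts(amp0: complex, amp1: complex, shots: int, seed: int = 0) -> dict[int, int]:
    """Repeat the simulated measurement `shots` times and tally the outcomes."""
    rng = random.Random(seed)  # fixed seed (an assumption) so runs are repeatable
    counts = {0: 0, 1: 0}
    for _ in range(shots):
        counts[measure(amp0, amp1, rng)] += 1
    return counts


if __name__ == "__main__":
    print(sample_counts(*equal_superposition(), shots=1000))
```

Running the script tallies roughly as many zeros as ones, whereas a qubit prepared with amplitudes `(1, 0)` would read as zero every time, just like a classical bit.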

Once this technology matures, it could solve certain kinds of problems, such as simulating molecules or factoring large numbers, far faster than today’s computers can. It could also increase the power and potential of machine learning and AI programs across a range of uses, from self-driving vehicles to automated trading.

Trend 3: Edge Computing

With the growth of the internet of things (IoT), companies are finding it difficult to analyze, interpret, and use the massive volumes of data they receive from their customers. Edge computing attempts to solve this problem by decentralizing data processing: moving it from a centralized point to a point closer to the data’s source. Cloud computing, by contrast, is a centralized model of big data management that requires high-powered computing capabilities and strong cybersecurity.

Because edge computing processes data near where it is generated, less data has to travel to a central data center. This lets organizations dedicate bandwidth where it’s most crucial and lowers data processing latency. In industries that rely on big data for routine operations (such as transportation and healthcare), edge computing is likely to facilitate more effective uses of the data being collected.
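One way to picture the bandwidth savings is a device that summarizes its own readings and sends only the summary upstream. The Python sketch below is a simplified illustration under that assumption: the `Summary` class, the `summarize_at_edge` function, the sensor name, and the alert threshold are all hypothetical and do not come from any particular edge platform.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Summary:
    """The compact record an edge device would send instead of every raw reading."""
    sensor_id: str
    count: int
    mean_value: float
    max_value: float
    alerts: int


def summarize_at_edge(sensor_id: str, readings: list[float], alert_threshold: float) -> Summary:
    """Reduce a batch of raw readings to one summary, computed on the device itself."""
    if not readings:
        raise ValueError("readings must not be empty")
    return Summary(
        sensor_id=sensor_id,
        count=len(readings),
        mean_value=mean(readings),
        max_value=max(readings),
        # Count readings above the threshold so the central system still sees anomalies.
        alerts=sum(1 for r in readings if r > alert_threshold),
    )


if __name__ == "__main__":
    raw = [20.0, 22.0, 30.0]  # made-up temperature readings for illustration
    print(summarize_at_edge("pump-1", raw, alert_threshold=25.0))
```

In this sketch a batch of any size becomes a single five-field record, so the central system receives the trend and the anomaly count without the raw stream.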

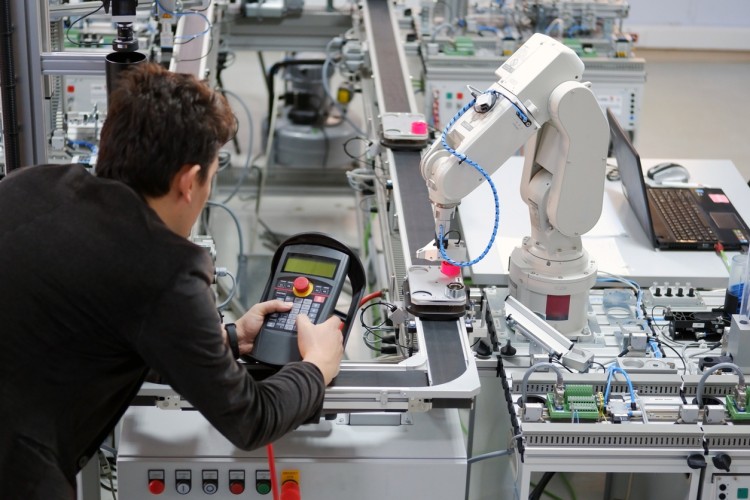

Trend 4: Robotics

Robotics is a branch of engineering in which machines are built to replicate human actions and behaviors. These “preprogrammed” robots have traditionally played a role in manufacturing, aiding in the production of vehicles and equipment. Capable of simple, monotonous tasks, they often rely on humans to instruct them when to start and end a task.

These days, autonomous robots (such as Roomba robotic vacuum cleaners or the Mars Exploration Rovers) are gaining popularity and can be found helping consumers and professionals, from law enforcement officers to climate scientists. Because robots can repeat a task with consistent precision and without fatigue, they’re often used to increase workplace safety and efficiency. Robotics is closely tied to AI: a robot combines physical hardware with software, and AI increasingly supplies the software that lets it make decisions on its own.
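The difference between a preprogrammed robot and an autonomous one can be sketched in a few lines of Python. This is a toy illustration, not real robot control code: the function names, the distance readings, and the 30 cm safe distance are all assumptions chosen for the example.

```python
def preprogrammed_run(steps: list[str]) -> list[str]:
    """A preprogrammed robot performs the same fixed steps regardless of its surroundings."""
    return list(steps)


def autonomous_step(distance_to_obstacle_cm: float, safe_distance_cm: float = 30.0) -> str:
    """An autonomous robot picks its next action from what its sensor reports."""
    return "turn" if distance_to_obstacle_cm < safe_distance_cm else "forward"


def autonomous_run(distance_readings: list[float], safe_distance_cm: float = 30.0) -> list[str]:
    """Apply the sense-then-decide rule to each sensor reading in turn."""
    return [autonomous_step(d, safe_distance_cm) for d in distance_readings]


if __name__ == "__main__":
    print(preprogrammed_run(["weld", "rotate", "weld"]))
    print(autonomous_run([100.0, 50.0, 10.0]))
```

The preprogrammed version never looks at its environment, while the autonomous version changes course once the simulated obstacle comes within the safe distance.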

Become a Leader in a Bold Future

Machines are becoming more autonomous and sophisticated than ever before. Still, machines rely on human ingenuity and design to ensure their functionality. This means that the future will require an expert workforce of software developers who are up for the task.

Maryville University’s online Bachelor of Science in Computer Science program offers hands-on experience and includes a certificate in one of six tech fields: AI, blockchain, cybersecurity, data science, software development, or user experience/user interface (UX/UI).

If you’re interested in keeping up with the newest technology trends in computer science, take a look at some of the benefits of earning a software development degree.

Sources

Forbes, “Artificial Intelligence: The Future of Cybersecurity?”

IBM, “Artificial Intelligence (AI)”

Investopedia, “Quantum Computing”

McKinsey & Company, “Quantum Computing: An Emerging Ecosystem and Industry Use Cases”
